Introduction

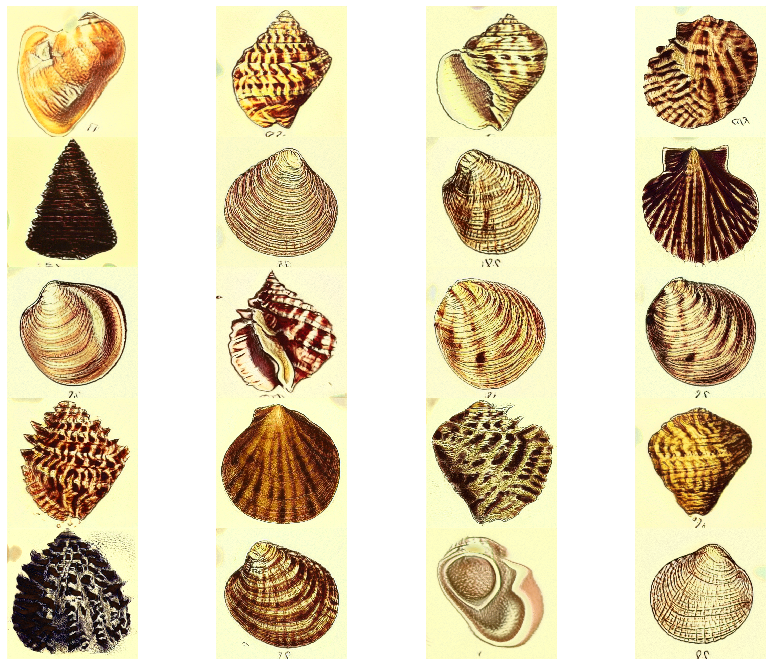

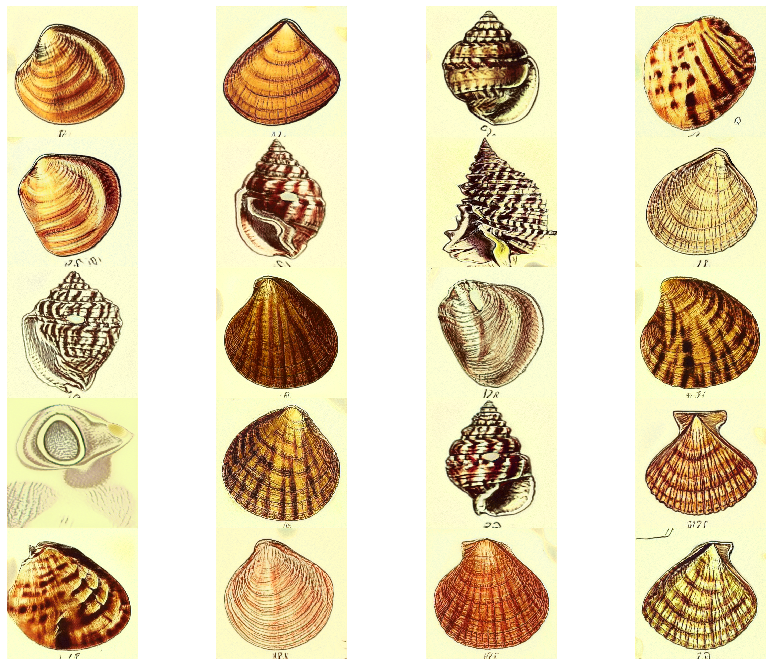

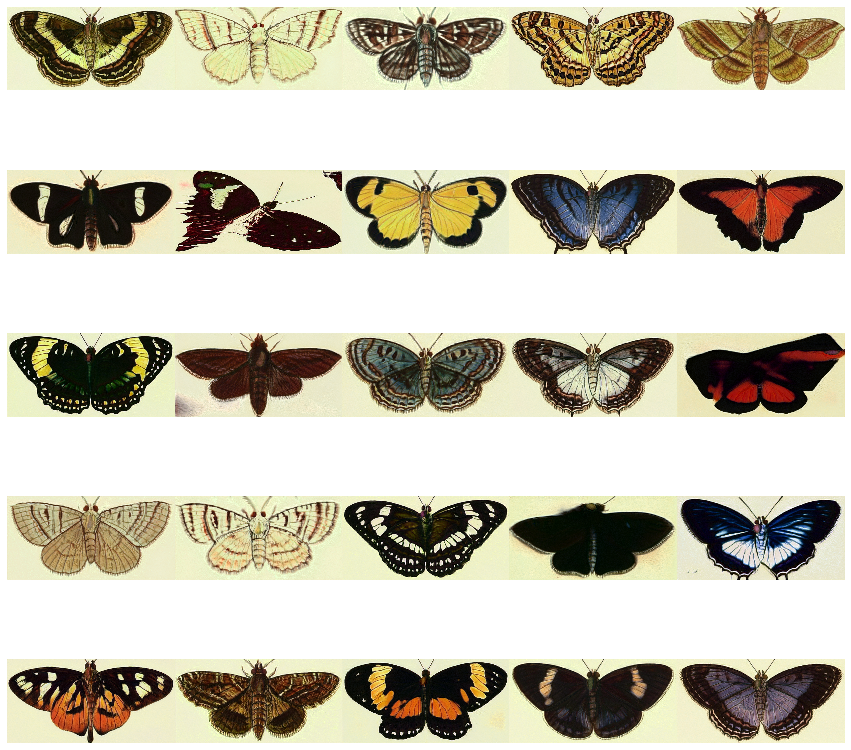

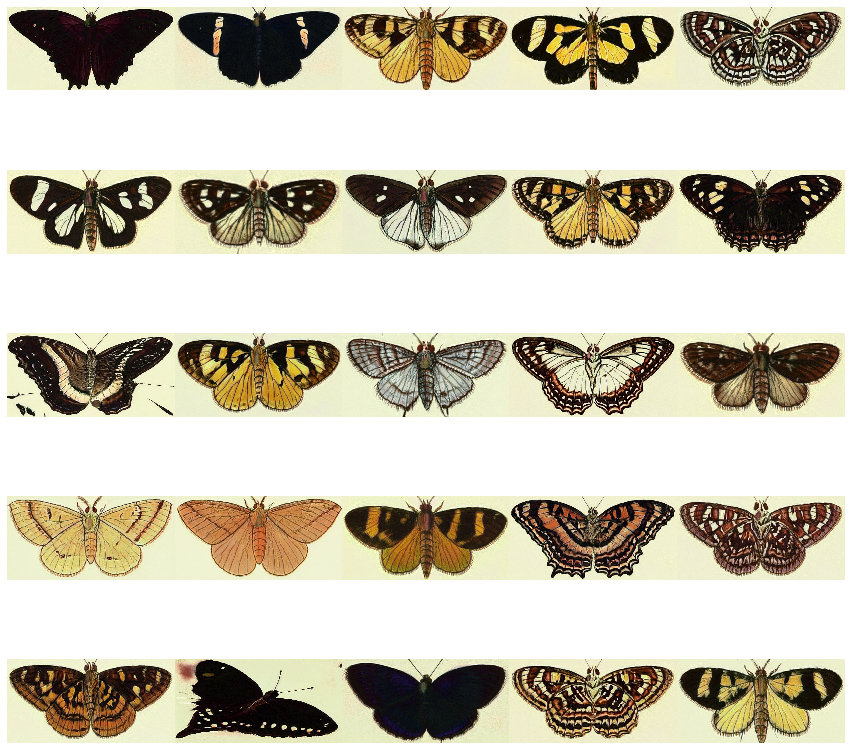

A month or so ago we learnt from an article titled Over 150,000 Botanical Illustrations Enter the Public Domain that the Biodiversity Heritage Library (BHL) released a large number of high resolution hand-drawn illustrations of nature for free! The Flickr collection is beautiful, and rather handily divided into albums containing similar images. Inspired by this beetle generator, we picked out a couple of albums with illustrations on the same subject and made ourselves some generators too. Here’s some examples of the generated illustrations:

Requirements

a GPU

We have one but the notebook upon which this is based runs in Google Colab, and there’s a Kaggle version too. Ours took 2 hrs/tick, the Colab one supposedly takes around 6hrs/tick, and Kaggle’s is said to be faster than that. We did around 6 ticks (overnight and then some), got impatient after that and the results already look pretty cool.

Libraries

numpyandscipyfor picking out individual objects from pages of illustrationsAugmentorfor resizing imagestensorflowfor running StyleGANgdownfor downloading the trained StyleGAN model

Picking out individuals

The first step is to make a nice large training set of individual shells / butterflies / what have you. The BHL illustrations generally have more than one object on a page though, so we need some code to extract each thing into its own image. We did this using scipy‘s image segmentation functions.

For each page of illustrations we

- binarized the page by setting pixels with a mean value > 125 to 1 else 0

- performed

binary_dilationwhich extends patches of 1s - performed

binary_fill_holeswhich does what it says on the tin - and then

binary_erosionwhich erodes it back so that objects which are close together aren’t seen as the same one. - and finally

labelandfind_objectswhich label each object separately and return bounding boxes around each one resp. We add a bit back to each bounding box to make up for the erosion.

from pathlib import Path

import numpy as np

from scipy import ndimage

def extract_objects(image, max_binary=125):

"""

Extracts objects from an image

:param image:

:param max_binary: if the mean is < this number then it's 0 else 1

:return: list of images with one object each in them

"""

image_mean = image.mean(axis=-1)

image_binary = np.where(image_mean < max_binary, 1, 0)

image_binary = ndimage.morphology.binary_dilation(image_binary, structure=np.ones((8, 8)))

image_binary = ndimage.morphology.binary_fill_holes(image_binary, structure=np.ones((3, 3)))

image_binary = ndimage.morphology.binary_erosion(image_binary, structure=np.ones((24, 24)))

labels = ndimage.label(image_binary)

add_back = 24 - 8

def alter_bbox(label):

return (slice(max(0, label[0].start - n), min(image.shape[0], label[0].stop + n)),

slice(max(0, label[1].start - n), min(image.shape[1], label[1].stop + n)))

bboxes = [alter_bbox(x) for x in ndimage.find_objects(labels[0])]

return [image[bbox] for bbox in bboxes]

input_folder = Path("butterflies/raw")

output_folder = Path("butterflies/processed")

i = 0

for page_file in input_folder.glob("*.jpg"):

page = plt.imread(page_file)

extracted_images = extract_objects(page)

for im in extracted_images:

plt.imsave(str(output_folder / f"{i}.jpg"), im)

i += 1

Then we used the Augmentor library to squash them all into the same size, 512 x 512 - the size StyleGAN works with. Augmentor also lets you do other kinds of transformations like rotations, which would help the network be invariant to rotations but we didn’t experiment with this yet.

import Augmentor

pipeline = Augmentor.Pipeline("butterflies/processed", "augmented", save_format="JPEG")

pipeline.resize(probability=1.0, width=512, height=512)

pipeline.process()

Transfer learning with StyleGAN

To train a StyleGAN network from scratch, the official tensorflow library cheerfully tells us to wait a couple of weeks. Instead we started with a network already trained on human faces (hence the transfer learning). You may think this would be a pretty bad start since human faces are pretty different from shells and butterflies, but it’s pretty nice to have a network which already knows about shading and edges and such. We followed this notebook that also works on Google Colab, skipping all the portrait-specific stuff.

The notebook also has some code to make videos that transition between different objects in the latent space:

Next

We should really let these train longer to get better quality pictures but they look cool and the GPU fan is noisy. Another idea is to make a dataset of all illustrations, not just of the same subject, and see where that leads us.